This is the 50th article in the award-winning “Real Words or Buzzwords?” series about how real words become empty words and stifle technology progress.

By Ray Bernard, PSP, CHS-III

Examining the terms involved in camera stream configuration settings and why they are important

There are two overall reasons to have a clear understanding of these video compression issues, both of which affect video system cost and performance:

- Higher levels of compression reduce network bandwidth requirements and video storage requirements, but increase video server processing requirements. In some deployments, this has degraded video quality when camera counts and/or higher compression levels have increased to the point where excessive server CPU processing demands result in dropped video frames and video image artifacts.

- It’s often easier to upgrade video server hardware than it is to expand network capacity, especially in the case where some or all of the video traffic is being carried by a corporate business network, rather than a physically separate network for video surveillance and other security applications. Determining the server requirements calls for a good understanding of the level of CPU processing power that will be required over the intended life of the server, which is recommended to be only two to three years given the increasingly rapid advancements in server and GPU hardware.
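The storage side of this trade-off comes down to simple arithmetic. The sketch below is only illustrative: the function name, camera count, bitrates and retention period are all assumed values, not figures from any particular deployment.

```python
def storage_terabytes(camera_count, mbps_per_camera, retention_days):
    """Estimate storage for continuous recording, in decimal terabytes.

    All inputs are hypothetical planning numbers; real bitrates vary
    with scene activity and codec settings.
    """
    seconds = retention_days * 24 * 60 * 60
    total_megabits = camera_count * mbps_per_camera * seconds
    total_megabytes = total_megabits / 8          # 8 bits per byte
    return total_megabytes / 1_000_000            # megabytes -> terabytes


# Assumed example: 100 cameras retained for 30 days.
at_4_mbps = storage_terabytes(100, 4, 30)   # about 129.6 TB
at_2_mbps = storage_terabytes(100, 2, 30)   # about 64.8 TB
print(f"4 Mbps: {at_4_mbps:.1f} TB, 2 Mbps: {at_2_mbps:.1f} TB")
```

Halving the per-camera bitrate through higher compression halves both the storage and the network load, which is why the compression settings are worth understanding before sizing the infrastructure.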

Today’s Codecs

The previous generation of video codec technology (codec being short for coder/decoder) dealt with improvements in compressing each video image, but still sent what was visually the same image over and over again when the scene the camera was viewing didn’t change. This single-image compression is called spatial compression – compression that analyses blocks of space within a video image to determine how they can be represented using less data. Some of the color and shading nuances in an image can’t be detected by the human eye. They can, for example, be averaged, without reducing the useful quality of the image.
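The averaging idea can be shown with a toy example. This is a minimal sketch, not how H.264 itself works (real codecs use block transforms and quantization); the function name, the 2x2 block size and the pixel values are assumptions chosen for illustration.

```python
def average_blocks(image, block=2):
    """Replace each block-by-block tile of a grayscale image with its mean.

    The result has fewer distinct values, so it can be described with
    less data, at the cost of small differences from the original.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[y][x] for y in rows for x in cols]
            mean = sum(tile) // len(tile)   # integer mean of the tile
            for y in rows:
                for x in cols:
                    out[y][x] = mean
    return out


# Hypothetical 4x4 grayscale image with subtle shading differences.
image = [
    [100, 102, 200, 198],
    [101, 103, 199, 201],
    [50, 52, 10, 12],
    [51, 53, 11, 13],
]
smoothed = average_blocks(image)
distinct_before = len({v for row in image for v in row})
distinct_after = len({v for row in smoothed for v in row})
print(distinct_before, "distinct values reduced to", distinct_after)
```

The smoothed image is visually very close to the original but is no longer identical to it, which is the essence of lossy spatial compression.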

Modern codecs use temporal compression – which is compression based on how a sequence of images varies over time. Only the changes from one video frame to the next are transmitted. If a bird flies across a clear blue sky, only the small areas where the bird is and was need to be updated.
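The bird-and-sky example can be sketched in a few lines. This is a simplified illustration that compares individual pixels; real codecs work on blocks and use motion estimation. The frame size, pixel values and function names are assumptions.

```python
def changed_pixels(previous, current):
    """Return {(row, col): new_value} for every pixel that differs."""
    return {
        (r, c): value
        for r, row in enumerate(current)
        for c, value in enumerate(row)
        if previous[r][c] != value
    }


def apply_changes(previous, changes):
    """Rebuild the current frame from the previous frame plus the changes."""
    rebuilt = [row[:] for row in previous]
    for (r, c), value in changes.items():
        rebuilt[r][c] = value
    return rebuilt


SKY, BIRD = 0, 9                      # hypothetical pixel values
frame1 = [[SKY] * 6 for _ in range(4)]
frame1[1][1] = BIRD                   # bird starts here
frame2 = [[SKY] * 6 for _ in range(4)]
frame2[1][2] = BIRD                   # bird has moved one pixel right

delta = changed_pixels(frame1, frame2)
print(len(delta), "of", 6 * 4, "pixels need to be sent")
```

Only two pixels change: the one the bird left and the one it moved into. The receiver can rebuild the second frame from the first frame plus that small update.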

These types of compression are referred to as lossy compression, because the decoded (i.e. rebuilt) video images are not identical to the original image. The less identical they are, the worse the video quality is, but also the lower the total amount of information is that’s transmitted and stored. This is where the trade-offs occur between video quality and the requirements for network bandwidth and storage.

Consumer Video Impacts

H.264 uses both types of compression – spatial and temporal – and has become the predominant type of video compression in the video industry, which includes online video streaming and television video broadcast. It is these highly profitable commercial uses of video that have driven the advances in video compression. The physical security industry does not drive these advances – the consumer video industry does.

Thus, you can find all kinds of advice online about configuring camera video for H.264 video compression – but only some of it applies to security video. The fact that security video is used for investigation purposes and courtroom evidence gives it quality requirements that differ from those of entertainment video. A primary consideration for commercial video is, “What can we fit into a single video disc?”

Security Video Considerations

The primary considerations regarding security video are the cost of the video system infrastructure (video transmission and storage) and the quality of the video evidence. Today, quality is no longer driven just by visual evidence image requirements. Ongoing advancements in AI-based video analytics are adding new requirements to video collection, with several new industry entrants saying that full facility situational awareness requires applying analytics to all cameras all the time, to provide a sound basis for anomaly detection. Video tracking of people and objects requires not just having evidence of the area where a critical asset was compromised, or an individual was hurt, but coverage of the entry and exit travel paths of all the individuals involved, including witnesses.

Video analytics for retail operations require much more detailed coverage of store merchandise displays, and 100% coverage of customer traffic areas with sufficient quality to determine customer facial responses so that AI can identify frustration, displeasure, or delight resulting from merchandise and promotional displays. Retail video is just one of the directions where video applications are going, and there are many of them for industrial/occupational safety, security and business operations reasons.

Need for Understanding Video Compression

Thus, individuals responsible for selecting video technology, as well as those deploying it, need to understand how video compression works and what the quality requirements are for all the various intended uses of the video. It also impacts camera placement and lighting design, which is why I included links to video quality guides in the previous article.

I-Frames, B-Frames and P-Frames

The term “frame” has been historically used for animation and video still images, to refer to the single snapshot in time that it presents. Today, “frame” is even more accurate than “image” because image most accurately refers not to what the camera’s sensor saw, or the compressed full or partial video image being transmitted, but to what the end user sees when viewing a video clip or still image.

In this article we’ll look at what these different H.264 video frame types are and how they relate to the intended uses of video. In the next article we’ll examine smart codecs and their significance to the different uses of video.

I-Frames

I-frame is short for intra-coded frame, meaning that the compression is done using only the information contained within that frame, the way a JPEG image is compressed. Intra is Latin for within. I-frames are also called keyframes, because each one contains the full image information. This is spatial compression.

P-Frames

P-frame is short for predicted frame and holds only the changes in the image from the previous frame. This is temporal compression. Except for video with high amounts of scene change, the approach of combining I-frames and P-frames can reduce the amount of data by between 50% and 90% compared to sending only I-frames. One significance of this is that higher frame rates can be supported, which improves the quality of the video. Many megapixel cameras today can transmit 60 frames per second whereas 10 years ago, 5 frames per second was a common limit for megapixel cameras.
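A rough calculation shows where savings of that order come from. The frame sizes below are purely assumed round numbers (a P-frame one tenth the size of an I-frame); actual sizes depend heavily on the scene and the camera.

```python
def stream_kb_per_second(fps, i_frames_per_second, i_frame_kb, p_frame_kb):
    """Data rate of a stream that mixes I-frames and P-frames."""
    p_frames_per_second = fps - i_frames_per_second
    return (i_frames_per_second * i_frame_kb
            + p_frames_per_second * p_frame_kb)


# Assumed sizes: 100 KB per I-frame, 10 KB per P-frame, 30 frames per second.
all_i = stream_kb_per_second(30, 30, 100, 10)   # every frame is an I-frame
mixed = stream_kb_per_second(30, 1, 100, 10)    # one I-frame per second
savings = 1 - mixed / all_i
print(f"{all_i} KB/s vs {mixed} KB/s: {savings:.0%} less data")
```

With these assumed sizes the mixed stream carries 87% less data than an all-I-frame stream. A busier scene produces larger P-frames and a smaller saving.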

B-Frames

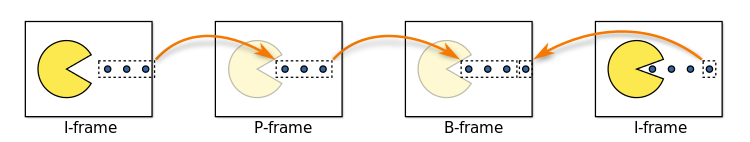

B-frame is short for bidirectional predicted frame, because it uses differences between the current frame and both the preceding and following frames to determine its content. Figure 1 from Wikipedia shows the relationships between the frame types.

Figure 1. H.264 Frame Types

Image source: Wikimedia commons

The more P-frames and B-frames there are between I-frames, the greater the compression, but also the greater the possibility of video image loss for the time interval between I-frames. For video conferencing, for example, you might have only one I-frame every five or ten seconds, which means the loss of a single I-frame could result in the loss of video for several seconds, as the reference for the following P-frames wouldn’t exist. Typically, a video conferencing system would simply display the last-received I-frame until the next I-frame was received, and that would have minimal impact on the conference call. The loss of many seconds of security video could be a different story. In the Figure 2 illustration below, the blue arrows from the B-frames point only to I-frames and P-frames.
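The effect of a lost frame on the frames that follow it can be modeled simply. This sketch makes two simplifying assumptions: B-frames are left out, and each P-frame depends only on the frame immediately before it. The function name and the example stream are hypothetical.

```python
def decodable_frames(frame_types, lost):
    """Return one boolean per frame: True if the frame can be decoded.

    frame_types is a sequence of "I" and "P"; lost is a set of frame
    indexes that never arrived. A P-frame is decodable only if it
    arrived and the frame before it was decodable.
    """
    result = []
    reference_ok = False
    for index, kind in enumerate(frame_types):
        received = index not in lost
        if kind == "I":
            reference_ok = received          # an I-frame stands alone
        else:
            reference_ok = reference_ok and received
        result.append(reference_ok)
    return result


# Two seconds of video at 6 frames per second, one I-frame per second.
stream = list("IPPPPP" * 2)
after_lost_i = decodable_frames(stream, lost={6})   # second I-frame lost
after_lost_p = decodable_frames(stream, lost={8})   # one P-frame lost
print(after_lost_i.count(False), "frames lost when the I-frame is dropped")
print(after_lost_p.count(False), "frames lost when a P-frame is dropped")
```

In this model, losing the I-frame costs the whole second of video, while losing a P-frame costs that frame and everything up to the next I-frame.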

Figure 2 below shows what the frame sequence might be for a 6-frame per second video stream with one I-frame per second.

Figure 2. Example frame sequence for a 6-frame-per-second video stream with one I-frame per second

Image source: Wikimedia commons

The Wikipedia Inter frame article explains some important aspects of this compression encoding:

Because video compression only stores incremental changes between frames (except for keyframes), it is not possible to fast forward or rewind to any arbitrary spot in the video stream. That is because the data for a given frame only represents how that frame was different from the preceding one. For that reason, it is beneficial to include keyframes at arbitrary intervals while encoding video.

For example, a keyframe may be output once for each 10 seconds of video, even though the video image does not change enough visually to warrant the automatic creation of the keyframe. That would allow seeking within the video stream at a minimum of 10-second intervals. The down side is that the resulting video stream will be larger in size because many keyframes are added when they are not necessary for the frame’s visual representation. This drawback, however, does not produce significant compression loss when the bitrate is already set at a high value for better quality (as in the DVD MPEG-2 format).

This is one reason why Eagle Eye’s cloud VMS marks selected frames as key frames for its search function – it facilitates better forward and backward video searching while still allowing the original video stream size to be small by containing fewer I-frames.
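The seeking limitation described in the Wikipedia excerpt can be sketched as a lookup of the nearest earlier keyframe. This is a generic illustration under assumed timestamps, not a description of how any particular VMS implements search.

```python
from bisect import bisect_right


def seek_start(keyframe_times, target):
    """Return the time of the latest keyframe at or before target.

    Decoding has to start from that keyframe and play forward, so the
    spacing of keyframes sets how precisely a player can jump.
    keyframe_times must be sorted in ascending order.
    """
    position = bisect_right(keyframe_times, target)
    if position == 0:
        raise ValueError("target precedes the first keyframe")
    return keyframe_times[position - 1]


# Hypothetical stream with a keyframe every 10 seconds.
keyframes = [0, 10, 20, 30]
print(seek_start(keyframes, 27))   # decoding must begin at second 20
print(seek_start(keyframes, 10))   # an exact keyframe hit starts at 10
```

A request for second 27 has to begin decoding at second 20, so more frequent keyframes mean less decoding work per jump, at the cost of a larger stream.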

Hanwha Techwin America provides a very informative and well-illustrated PDF presentation file titled H.265 vs. H.264 that discusses many of the points in this article and relates them to video settings and video quality.

Group of Pictures (GOP) / Group of Video Pictures (GOV)

These two terms come from the MPEG video compression standards, and for purposes of this discussion mean the same thing. A Group of Pictures begins with an I-frame, followed by some number of P-frames and B-frames. Figure 2 above shows a video stream with a GOP length of six: one I-frame followed by five P-frames and B-frames. Most security video documentation uses GOP Length and GOV Length interchangeably, which only causes confusion if you don’t know about it and the camera settings say “GOV Length” while the VMS settings say “GOP Length”. In short, a shorter GOP length results in more I-frames, while a longer GOP length results in fewer I-frames and greater compression.
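The relationship between GOP length and the number of I-frames can be shown with a small pattern generator. For simplicity this sketch labels every non-I-frame as a P-frame and ignores B-frames; the function name and the frame rates are assumptions.

```python
def gop_pattern(gop_length, frame_count):
    """Return the frame-type sequence for a fixed GOP length.

    Every GOP starts with an I-frame; the remaining frames are shown
    as P-frames (B-frames are omitted in this simplified model).
    """
    return "".join(
        "I" if index % gop_length == 0 else "P"
        for index in range(frame_count)
    )


# Two seconds at 6 frames per second with a GOP length of six.
print(gop_pattern(6, 12))

# Two seconds at 30 frames per second: shorter GOP, more I-frames.
short_gop = gop_pattern(15, 60)
long_gop = gop_pattern(60, 60)
print(short_gop.count("I"), "I-frames vs", long_gop.count("I"))
```

At 30 frames per second, a GOP length of 15 yields two I-frames per second, while a GOP length of 60 yields one I-frame every two seconds.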

Quality Implications of Frame Types

Video encoding includes methods that average out the properties of very small blocks of pixels in the image. Earlier versions of video standards have stricter requirements relating to the types of compression, such as averaging, that can be used for encoding the pixel blocks in each frame type. Later standards allow more compression options.

H.264 Slices

What’s also significant about H.264 is that the standard introduced the idea of separately encoded “slices”. A slice is a spatially distinct region of a frame that is encoded separately from any other region in the same frame. I-slices, P-slices, and B-slices take the place of I, P, and B frames.

That part of the standard is what has led to smart codecs, where the type of compression used – such as high, medium or low quality – can vary within the same frame. Thus, for example, a plain wall can receive high-compression low-quality treatment, while the person walking in front of the wall gets low-compression high-quality treatment.

Smart codecs utilize multiple types of compression within a single image, providing higher quality and higher compression for each type of frame, depending, of course, on the content of the frame.
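The wall-and-person example can be expressed as a simple per-region decision. This is only a conceptual sketch: the activity scores, the threshold and the function name are invented for illustration, and actual smart codecs use vendor-specific logic.

```python
def choose_quality(region_activity, threshold=0.2):
    """Pick a quality level for each region of a frame.

    region_activity maps a region name to an assumed activity score
    between 0 and 1. Regions above the threshold get low-compression,
    high-quality treatment; the rest get high compression.
    """
    return {
        name: ("high" if activity > threshold else "low")
        for name, activity in region_activity.items()
    }


# Hypothetical activity scores for two regions of one frame.
activity = {"plain wall": 0.01, "person walking": 0.65}
plan = choose_quality(activity)
for region, quality in plan.items():
    print(f"{region}: {quality} quality")
```

The static wall gets the low-quality setting and the moving person gets the high-quality setting, all within the same frame.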

The next article in this series will provide more detail on smart codecs, which is important, because the “smartness” of such codecs can vary significantly between vendors and even within a vendor’s own camera line.

About the Author:

Ray Bernard, PSP CHS-III, is the principal consultant for Ray Bernard Consulting Services (RBCS), a firm that provides security consulting services for public and private facilities (www.go-rbcs.com). In 2018 IFSEC Global listed Ray as #12 in the world’s top 30 Security Thought Leaders. He is the author of the Elsevier book Security Technology Convergence Insights available on Amazon. Mr. Bernard is a Subject Matter Expert Faculty of the Security Executive Council (SEC) and an active member of the ASIS International member councils for Physical Security and IT Security. Follow Ray on Twitter: @RayBernardRBCS.